Building AI-Powered Moderation for UGC Platforms

Implementing AI-Powered Moderation Systems

Key Benefits of AI-Powered Moderation

AI-powered moderation systems offer several advantages over purely human review. Automated screening can cut the time each item waits for a decision and frees human moderators to focus on complex or nuanced cases. Processing user-generated content (UGC) at scale matters most for platforms with high upload volumes, where faster response times limit how far harmful content spreads before it is removed. AI systems also apply the same policy thresholds to every item, which reduces the inconsistency that arises when different moderators interpret the same rule differently, although, as discussed below, a model can still reproduce biases present in its training data.

AI-powered moderation also provides valuable data insights. By analyzing patterns in reported and flagged content, these systems can surface emerging trends, such as a sudden rise in a particular scam or harassment campaign, before they escalate. This proactive approach lets teams develop more effective moderation strategies and refine platform policies over time. AI models can also adapt to changing user behavior and content trends, provided they are regularly retrained on freshly labeled examples, so the moderation system remains effective as the platform evolves.
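As a minimal sketch of this kind of trend detection, the function below flags report categories whose count on the most recent day is well above their earlier daily average. It assumes a hypothetical data shape in which each report is a `(day_index, category)` pair; the `ratio` and `min_count` parameters are illustrative defaults, not recommended values, and a production system would typically use more robust baselines such as rolling-window or seasonal statistics.

```python
from collections import Counter, defaultdict
from statistics import mean


def detect_report_spikes(reports, ratio=2.0, min_count=5):
    """Return categories whose latest-day report count looks like a spike.

    `reports` is an iterable of (day_index, category) pairs, a hypothetical
    schema used only for this illustration.
    """
    reports = list(reports)
    if not reports:
        return []

    days = sorted({day for day, _ in reports})
    latest, history = days[-1], days[:-1]

    # Count reports per category per day.
    per_category = defaultdict(Counter)
    for day, category in reports:
        per_category[category][day] += 1

    spikes = []
    for category, by_day in per_category.items():
        current = by_day[latest]
        # Baseline: mean daily count over earlier days (a Counter returns 0
        # for days with no reports in this category).
        baseline = mean(by_day[d] for d in history) if history else 0.0
        # Require both an absolute minimum and a relative jump; the baseline
        # is floored at 1 so rare categories need at least `ratio` reports.
        if current >= min_count and current >= ratio * max(baseline, 1.0):
            spikes.append(category)
    return sorted(spikes)


if __name__ == "__main__":
    # Toy data: "spam" is steady at 3/day, "scam" jumps from 1/day to 8.
    reports = [(d, "spam") for d in range(4) for _ in range(3)]
    reports += [(d, "scam") for d in range(3)] + [(3, "scam")] * 8
    print(detect_report_spikes(reports))  # ['scam']
```

Requiring both an absolute minimum and a relative jump keeps a category that goes from zero to one report from being flagged as a trend.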

Challenges in Implementing AI-Powered Moderation

While AI-powered moderation systems offer significant advantages, they also pose inherent challenges. One is the potential for bias in the training data, which can lead to discriminatory outcomes, such as content written in some dialects being flagged more often than comparable content in others. Careful selection and curation of training data, together with audits of error rates across user groups, are essential to mitigate this risk and ensure fair treatment. Another significant challenge is the need for ongoing monitoring and refinement of the AI models as content creation techniques evolve (for example, deliberate misspellings used to evade filters) and new forms of harmful behavior emerge.
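One way to check for discriminatory outcomes is to compare error rates across groups of content on a labeled evaluation set. The sketch below computes the false positive rate (the share of benign items wrongly flagged) for each group and the largest gap between groups. The record format and group labels are hypothetical; which groups to compare, and what size of gap is acceptable, are policy decisions this code does not make.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Compute the false positive rate for each group.

    `records` is an iterable of (group, predicted_harmful, actually_harmful)
    tuples, a hypothetical evaluation format. Only benign items
    (actually_harmful is False) contribute to a false positive rate.
    """
    false_positives = defaultdict(int)
    benign = defaultdict(int)
    for group, predicted_harmful, actually_harmful in records:
        if not actually_harmful:
            benign[group] += 1
            if predicted_harmful:
                false_positives[group] += 1
    return {group: false_positives[group] / n for group, n in benign.items()}


def largest_rate_gap(rates):
    """Difference between the highest and lowest per-group rate."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0


if __name__ == "__main__":
    records = (
        [("dialect_a", False, False)] * 3
        + [("dialect_a", True, False)]
        + [("dialect_b", False, False)] * 2
        + [("dialect_b", True, False)] * 2
        + [("dialect_a", True, True)]  # harmful item: ignored for this rate
    )
    rates = false_positive_rate_by_group(records)
    print(rates)  # {'dialect_a': 0.25, 'dialect_b': 0.5}
    print(largest_rate_gap(rates))  # 0.25
```

In this toy data, benign posts in `dialect_b` are wrongly flagged twice as often as those in `dialect_a`, the kind of disparity that would prompt a closer look at the training data.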

Ensuring the accuracy and reliability of AI-powered moderation systems is paramount. False positives, where legitimate content is incorrectly flagged as harmful, frustrate users and can lead to unwarranted content removals or account suspensions. False negatives, where harmful content slips through, leave users exposed to it. Because raising a model's flagging threshold typically trades false positives for false negatives, robust testing and validation on held-out labeled data are necessary to choose an acceptable balance. Furthermore, the ethical implications of AI-powered moderation, including transparency, accountability, and user privacy, require careful consideration and proactive safeguards.
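False positives can be measured directly on a held-out labeled set. The sketch below computes precision (the share of flagged items that are truly harmful) and recall (the share of harmful items that get flagged) at a given score threshold, then picks the lowest threshold whose precision meets a target. It assumes the model outputs a harm score in [0, 1]; the example scores, labels, and the 0.9 target are made up for illustration.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when items with score >= threshold are flagged.

    `labels` holds True for items a human judged harmful. When nothing is
    flagged, precision is reported as 1.0 by convention (conventions vary).
    """
    tp = fp = fn = 0
    for score, harmful in zip(scores, labels):
        flagged = score >= threshold
        if flagged and harmful:
            tp += 1
        elif flagged:
            fp += 1  # legitimate content flagged: a false positive
        elif harmful:
            fn += 1  # harmful content missed: a false negative
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


def lowest_threshold_for_precision(scores, labels, target_precision):
    """Smallest observed score threshold whose precision meets the target.

    Recall never increases as the threshold rises, so the lowest qualifying
    threshold also has the highest recall among qualifying thresholds.
    Returns None if no threshold meets the target.
    """
    for threshold in sorted(set(scores)):
        precision, _ = precision_recall_at(scores, labels, threshold)
        if precision >= target_precision:
            return threshold
    return None


if __name__ == "__main__":
    scores = [0.95, 0.9, 0.8, 0.6, 0.4, 0.2]
    labels = [True, True, False, True, False, False]
    print(precision_recall_at(scores, labels, 0.6))  # (0.75, 1.0)
    print(lowest_threshold_for_precision(scores, labels, 0.9))  # 0.9
```

In the toy data, moving the threshold from 0.6 to 0.9 removes the one false positive but also misses one harmful item, which is the trade-off a platform has to decide on explicitly.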

Specific AI Techniques for Content Moderation

Various AI techniques can be employed for content moderation, each with its own strengths and weaknesses. Natural Language Processing (NLP) is central to understanding and interpreting text, enabling AI systems to identify hate speech, harassment, or misinformation. Machine learning models, including deep learning models, can be trained on large datasets of labeled content to learn patterns and classify new content. Given sufficient and representative training data, these models can recognize more nuanced forms of harmful content than simple keyword filters, such as subtle expressions of bias or targeted harassment, although such subtle cases remain among the hardest to classify reliably.
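To illustrate how a model learns from labeled content, the sketch below trains a multinomial naive Bayes classifier on a handful of toy examples. Naive Bayes is a classic, deliberately simple stand-in for the deep learning models mentioned above, not an equivalent. The labels, tokenizer, and training texts are hypothetical; a real system needs a large, representative labeled dataset, and a bag-of-words model like this cannot capture subtle, context-dependent harassment.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesModerator:
    """Multinomial naive Bayes text classifier with add-one smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of token frequencies
        self.doc_counts = Counter()  # label -> number of training documents
        self.vocab = set()

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        """Return the label with the highest log posterior score."""
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        # Words never seen in training carry no evidence, so skip them.
        tokens = [t for t in tokenize(text) if t in self.vocab]
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for token in tokens:
                # Add-one (Laplace) smoothing avoids log(0) for unseen pairs.
                score += math.log((counts[token] + 1) / (total_words + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    texts = [
        "you are an idiot and I hate you",
        "I hate you so much, loser",
        "thanks for sharing this great post",
        "great photo, love it",
    ]
    labels = ["harassment", "harassment", "ok", "ok"]
    model = NaiveBayesModerator().fit(texts, labels)
    print(model.predict("what a great post"))  # ok
    print(model.predict("I hate you, idiot"))  # harassment
```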

Beyond text, image and video moderation require their own AI techniques. Computer vision models can identify and classify inappropriate images or videos, detecting elements such as nudity, violence, or other graphic content, which allows efficient moderation across media types. Combining NLP and computer vision gives more complete coverage of the diverse nature of UGC, for example catching a post whose caption is harmless but whose attached image is not.
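One simple way to combine text and image analysis is late fusion: run each modality's classifier separately and merge their per-category scores. The sketch below keeps the maximum score per category, so a violation detected in any single modality is enough to flag the post. The score dictionaries stand in for the outputs of hypothetical upstream NLP and computer vision models, and the 0.8 threshold is illustrative.

```python
def fuse_scores(modality_scores):
    """Merge per-modality scores by keeping the highest score per category.

    `modality_scores` maps a modality name (e.g. "text", "image") to a dict
    of category -> score in [0, 1]. Returns category -> (score, modality),
    recording which modality produced the winning score.
    """
    fused = {}
    for modality, scores in modality_scores.items():
        for category, score in scores.items():
            if category not in fused or score > fused[category][0]:
                fused[category] = (score, modality)
    return fused


def violations(modality_scores, threshold=0.8):
    """List (category, modality) pairs whose fused score meets the threshold."""
    fused = fuse_scores(modality_scores)
    return sorted(
        (category, modality)
        for category, (score, modality) in fused.items()
        if score >= threshold
    )


if __name__ == "__main__":
    post = {
        "text": {"harassment": 0.10, "violence": 0.30},
        "image": {"nudity": 0.05, "violence": 0.92},
    }
    print(violations(post))  # [('violence', 'image')]
```

Taking the maximum keeps the logic transparent and errs toward flagging, but it cannot catch content that is harmful only in combination, such as a meme whose text and image are each benign; weighted fusion or a jointly trained multimodal model are common alternatives for those cases.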

Integrating AI into Existing Moderation Infrastructure

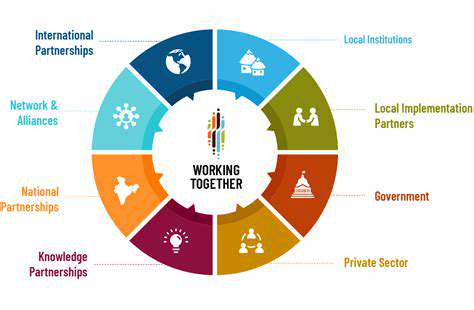

Integrating AI-powered moderation systems into existing infrastructure requires careful planning and execution. Platforms need to assess whether their current systems can scale to the additional processing load. Data pipelines must be optimized to feed content to the AI models efficiently, with real-time processing where necessary, for example for newly posted comments or live streams. Clear workflows must also define how the AI system and human moderators divide the work, such as which decisions the model may take automatically and which are escalated for human review, so that every content type is handled efficiently during and after the transition.
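A common pattern for dividing work between the model and human moderators is confidence-based routing: the system acts automatically only when the model's harm score is very high and sends uncertain cases to a human review queue ordered by score. The sketch below assumes a single harm score in [0, 1]; the `RoutingPolicy` thresholds, the `Action` values, the `ReviewQueue` class, and the `moderate` helper are hypothetical names, and the thresholds would need tuning against each platform's own validation data and policies.

```python
import heapq
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass(frozen=True)
class RoutingPolicy:
    remove_at: float = 0.95  # auto-remove only when the model is very confident
    review_at: float = 0.60  # the uncertain band goes to human moderators

    def route(self, harm_score: float) -> Action:
        if not 0.0 <= harm_score <= 1.0:
            raise ValueError(f"harm_score must be in [0, 1], got {harm_score}")
        if harm_score >= self.remove_at:
            return Action.REMOVE
        if harm_score >= self.review_at:
            return Action.HUMAN_REVIEW
        return Action.ALLOW


@dataclass
class ReviewQueue:
    """Max-priority queue: moderators see the highest-scoring items first."""
    _heap: list = field(default_factory=list)
    _seq: int = 0  # tie-breaker keeps insertion order for equal scores

    def push(self, item_id: str, harm_score: float) -> None:
        # heapq is a min-heap, so negate the score to pop the largest first.
        heapq.heappush(self._heap, (-harm_score, self._seq, item_id))
        self._seq += 1

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


def moderate(items, policy, queue):
    """Route (item_id, harm_score) pairs; queue the ones needing review."""
    decisions = {}
    for item_id, harm_score in items:
        action = policy.route(harm_score)
        decisions[item_id] = action
        if action is Action.HUMAN_REVIEW:
            queue.push(item_id, harm_score)
    return decisions


if __name__ == "__main__":
    queue = ReviewQueue()
    items = [("p1", 0.97), ("p2", 0.70), ("p3", 0.20), ("p4", 0.85)]
    decisions = moderate(items, RoutingPolicy(), queue)
    print({k: v.value for k, v in decisions.items()})
    print(queue.pop())  # p4 (highest score in the review band)
```

Keeping the thresholds in one immutable policy object makes it easy to log which policy version produced each decision, which helps with the accountability concerns raised earlier.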

Transitioning to AI-powered moderation calls for a phased approach. Starting with pilot programs and gradually expanding the scope of AI involvement helps surface problems and tune the system's performance before full-scale deployment. Regular monitoring and evaluation of the system's effectiveness then identify areas for improvement, allowing the AI-powered moderation infrastructure to be refined continuously.
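Two mechanics often used in such pilots are shadow mode, where the model's decisions are logged but not enforced so they can be compared with human moderators' decisions, and deterministic percentage rollouts, where a stable hash of each item's ID decides whether the AI path handles it. The sketch below shows both; the function names, the SHA-256 bucketing scheme, and the decision labels are illustrative assumptions rather than a prescribed design.

```python
import hashlib


def in_rollout(item_id: str, percent: float) -> bool:
    """Deterministically decide whether an item falls in the AI pilot cohort.

    The same item_id always maps to the same bucket, and an item included at
    a given percent stays included when the percent is raised.
    """
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100  # e.g. percent=5 covers buckets 0..499


def shadow_agreement(decision_pairs):
    """Share of items where the model's (unenforced) decision matched the human one.

    `decision_pairs` is an iterable of (ai_decision, human_decision) tuples.
    Returns None when there is nothing to compare.
    """
    pairs = list(decision_pairs)
    if not pairs:
        return None
    agreed = sum(1 for ai, human in pairs if ai == human)
    return agreed / len(pairs)


def disagreements(decision_pairs_by_item):
    """Item IDs where model and human disagreed, for manual error analysis."""
    return sorted(
        item_id
        for item_id, (ai, human) in decision_pairs_by_item.items()
        if ai != human
    )


if __name__ == "__main__":
    pilot = [f"post-{i}" for i in range(10_000) if in_rollout(f"post-{i}", 10)]
    print(len(pilot))  # roughly 1,000 of 10,000 items
    log = {
        "post-1": ("remove", "remove"),
        "post-2": ("allow", "remove"),
        "post-3": ("allow", "allow"),
        "post-4": ("allow", "allow"),
    }
    print(shadow_agreement(log.values()))  # 0.75
    print(disagreements(log))  # ['post-2']
```

Hashing the item ID, rather than sampling randomly on each request, keeps an item's assignment stable across retries and lets the cohort grow monotonically as the pilot expands.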