The Role of AI in Identifying Ethical Risks

Adopting Eco-Friendly Technologies Enhances Brand Reputation

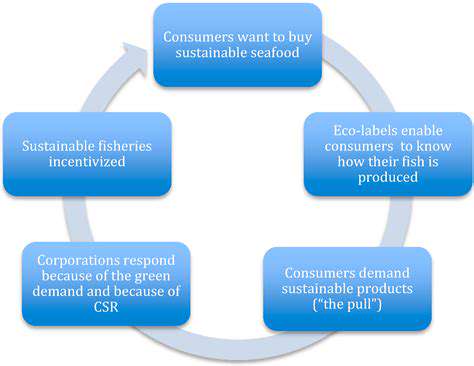

Companies today recognize that integrating eco-friendly technologies is not just about environmental responsibility; it is also a powerful business strategy. When organizations transition to renewable energy sources and implement waste reduction systems, they not only reduce their carbon footprint but also make a statement about their values. Consumers increasingly vote with their wallets, favoring brands that demonstrate genuine commitment to sustainability. This shift in consumer behavior has transformed green initiatives from optional PR moves into essential components of corporate strategy.

The financial benefits of sustainable practices often surprise executives. While initial investments may seem substantial, the long-term savings from reduced energy consumption and streamlined operations frequently outweigh the costs. Many forward-thinking companies now incorporate sustainability metrics into executive performance reviews, signaling that environmental stewardship counts toward career advancement. What began as a set of compliance measures has evolved into a competitive advantage that drives innovation and market differentiation.

The Role of Consumer Awareness and Regulatory Pressures

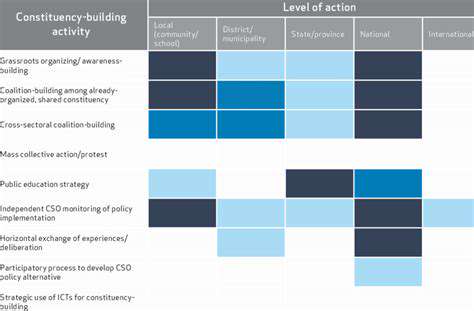

Today's consumers aren't just passively accepting corporate claims—they're demanding proof. The rise of eco-conscious shopping has created an entire industry around sustainability verification, from carbon footprint audits to supply chain transparency reports. Companies that can't provide verifiable data about their environmental impact risk losing market share to competitors who can. Social media has amplified this effect, turning every sustainability misstep into potential viral backlash.

Governments worldwide are responding to public pressure with increasingly stringent regulations. Rules that began as voluntary guidelines are hardening into mandatory requirements with real financial consequences for non-compliance. The smartest organizations do more than meet current standards: they anticipate future regulations and position themselves as industry leaders in sustainability. This proactive approach not only avoids penalties but often reveals new business opportunities in emerging green markets.

Leveraging Machine Learning Algorithms to Detect Bias and Discrimination

Understanding the Foundations of Machine Learning Techniques

The field of machine learning continues to evolve at a breathtaking pace, but its core principles remain rooted in pattern recognition. These systems don't think in human terms; they identify statistical relationships in data that humans might miss. The art of machine learning lies not in the algorithms themselves, but in knowing which tool to use for each problem. Seasoned data scientists often compare their work to carpentry, where having the right tool for the job makes all the difference.

Different algorithms serve distinct purposes, much like different medical tests diagnose different conditions. Classification algorithms sort data into categories, while regression models predict numerical values. The most effective practitioners develop an intuition for matching problem types with appropriate methods. This expertise becomes particularly valuable when dealing with incomplete or noisy datasets where textbook solutions might fail.

Implementing Machine Learning in Real-World Applications

The journey from theoretical model to practical implementation resembles translating a scientific discovery into a usable product. Data preparation—often the most time-consuming phase—can make or break a project. Cleaning datasets requires both technical skill and domain expertise to recognize meaningful patterns versus statistical noise.

Model selection represents another critical decision point. While neural networks dominate headlines for their impressive capabilities, simpler models often outperform them in specific applications where interpretability matters. The best solutions frequently combine multiple approaches, leveraging their respective strengths while mitigating weaknesses. Effective implementation requires constant iteration, with real-world feedback refining models beyond their initial training.

Overcoming Challenges and Ethical Considerations

Machine learning's potential comes with significant responsibility. The same algorithms that can detect disease patterns in medical images might inadvertently discriminate if trained on biased datasets. Ethical machine learning requires vigilance at every stage—from data collection to model deployment—to prevent amplifying societal inequalities. Many organizations now employ red teams specifically tasked with identifying potential harms before systems go live.
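One simple check a review team might run before deployment is to compare how often a model gives a favorable decision to each group. The sketch below is a minimal illustration under stated assumptions: the group labels, the toy predictions, and the function names `selection_rates` and `disparate_impact_ratio` are all hypothetical, and a ratio well below 1.0 is a signal to investigate rather than proof of discrimination.

```python
from collections import defaultdict

def selection_rates(groups, predictions):
    """Return the fraction of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives positive predictions, so rates are equal
    return min(rates.values()) / highest

# Hypothetical toy data: a group label and a binary model decision per applicant.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))
```

A commonly cited rule of thumb treats a ratio below 0.8 as worth a closer look, but the right threshold and the right fairness metric depend on the application and its legal context.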

The environmental impact of large models presents another growing concern. Training the largest models can consume very large amounts of energy, creating tension between technological progress and sustainability goals. Some researchers advocate for "green AI" approaches that prioritize efficiency without sacrificing performance. As the field matures, balancing these competing priorities will become increasingly important.

The Use of Natural Language Processing to Uncover Privacy and Ethical Concerns

Natural Language Processing and Privacy

Natural Language Processing (NLP) systems face unique privacy challenges because they inherently process human communication—our most personal form of expression. Unlike structured data, language contains subtle cues about individuals' identities, emotions, and relationships. Protecting privacy in NLP requires more than just anonymizing names—it demands understanding how combinations of seemingly innocuous details can reveal identities.
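The point about combinations of innocuous details can be made concrete with a k-anonymity style check: count how many records share each combination of attributes and look at the smallest group. This is a minimal sketch, assuming the attributes have already been extracted from text into records; the field names and the helper `smallest_group_size` are hypothetical.

```python
from collections import Counter

def smallest_group_size(records, quasi_identifiers):
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    keys = [tuple(record[q] for q in quasi_identifiers) for record in records]
    return min(Counter(keys).values())

# Hypothetical attributes extracted from four users' messages.
records = [
    {"zip": "02139", "age_band": "30-39"},
    {"zip": "02139", "age_band": "40-49"},
    {"zip": "02140", "age_band": "30-39"},
    {"zip": "02140", "age_band": "40-49"},
]

k_zip = smallest_group_size(records, ["zip"])
k_age = smallest_group_size(records, ["age_band"])
k_both = smallest_group_size(records, ["zip", "age_band"])
print(k_zip, k_age, k_both)
```

In this toy data each attribute alone is shared by two people, but the pair of attributes singles out every individual, even though no names appear anywhere.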

Ethical Considerations in NLP Applications

The ethical landscape of NLP resembles a minefield of unintended consequences. Systems trained on historical texts often inherit outdated perspectives, potentially reinforcing harmful stereotypes. Responsible NLP development requires active measures to identify and counteract these inherited biases, not just technically but philosophically. Some organizations now employ ethicists alongside engineers to navigate these complex issues.

Identifying and Mitigating Bias in NLP Models

Bias detection in NLP models has evolved into a sophisticated discipline of its own. Techniques range from statistical analyses of output distributions to qualitative examinations of edge cases. The most effective approaches combine automated testing with human review, recognizing that some forms of bias only become apparent through lived experience.
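One automated test of this kind fills the same sentence templates with different group terms and compares the scores a model assigns. The sketch below is illustrative only: `toy_score` is a hypothetical stand-in for a real model, with a bias planted on purpose so the test has something to find, and the templates are invented for the example.

```python
from statistics import mean

# Hypothetical stand-in for a real model: a tiny lexicon-based scorer.
# The weight on "elderly" is a deliberately planted bias for illustration.
TOY_WEIGHTS = {"great": 1.0, "terrible": -1.0, "elderly": -0.5}

def toy_score(text):
    """Sum lexicon weights over lowercase whitespace-separated tokens."""
    return sum(TOY_WEIGHTS.get(token, 0.0) for token in text.lower().split())

def mean_score_gap(score_fn, templates, term_a, term_b):
    """Average score difference between templates filled with term_a and with term_b."""
    gaps = [
        score_fn(t.format(person=term_a)) - score_fn(t.format(person=term_b))
        for t in templates
    ]
    return mean(gaps)

templates = [
    "the {person} applicant did a great job",
    "the {person} applicant was terrible",
]

gap = mean_score_gap(toy_score, templates, "elderly", "young")
print(gap)
```

A gap near zero suggests the swapped term does not shift the score on these templates. A consistent nonzero gap flags cases for the human review described above, since templates cover only the patterns someone thought to write down.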

The Role of Transparency and Explainability in NLP

As NLP systems influence more areas of life—from loan approvals to medical diagnoses—their opacity becomes increasingly problematic. Explainable AI techniques attempt to bridge this gap, offering glimpses into the black box without oversimplifying complex systems. The challenge lies in providing meaningful explanations to non-technical users while maintaining model accuracy.
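For the simplest class of models, an explanation can be read directly from the parameters. The sketch below assumes a linear scoring model, where each feature contributes its weight times its value; the feature names, weights, and the helper `explain_linear_score` are hypothetical. Non-linear models do not decompose this cleanly and need other explanation techniques.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and per-feature contributions (weight * value), largest first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical weights and one applicant's (already scaled) feature values.
weights = {"income": 0.5, "missed_payments": -2.0, "account_age": 0.25}
features = {"income": 4.0, "missed_payments": 1.0, "account_age": 2.0}

score, ranked = explain_linear_score(weights, bias=-1.0, features=features)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
print(f"score: {score:+.2f}")
```

A ranked list like this can be turned into a plain-language statement for a non-technical user, such as which factor counted most against them.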

Data Security and Privacy in NLP Systems

NLP systems handling sensitive information require security measures beyond standard IT practices. Differential privacy techniques, federated learning architectures, and strict access controls form multiple layers of defense against data breaches. The most secure systems design privacy protections into their core architecture rather than adding them as afterthoughts.
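As an illustration of the first of these techniques, the sketch below applies the Laplace mechanism to a counting query: it adds noise scaled to 1/epsilon so that any single message has limited influence on the released number. The message data, the `epsilon` value, and the function names are assumptions made for the example, and a plain floating-point implementation like this one is not production-grade; a vetted differential privacy library would be the safer choice in practice.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Noisy count of matching items; a counting query has sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)  # seeded only to make the sketch repeatable
# Hypothetical user messages; the true count of matches is 2.
messages = ["refill my prescription", "book a flight", "prescription question"]

noisy = private_count(messages, lambda m: "prescription" in m, epsilon=0.5, rng=rng)
print(noisy)
```

Smaller values of `epsilon` add more noise and give stronger privacy at the cost of accuracy, which is the trade-off a system designer has to set deliberately.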

The Impact of NLP on Human-Computer Interaction

NLP's ability to simulate human conversation creates both opportunities and risks. While more natural interfaces improve accessibility, they also raise questions about emotional manipulation and informed consent. Clear boundaries must distinguish human from machine interactions, especially in sensitive domains like mental health support.

Future Directions and Research in Ethical NLP

The frontier of ethical NLP research explores fascinating questions: How can models respect cultural context without stereotyping? What constitutes informed consent for data used in training? Answering these questions requires collaboration across disciplines—linguistics, philosophy, law, and computer science—to develop frameworks that keep pace with technological advancement.

Challenges and Future Directions in AI-Driven Ethical Risk Detection

Addressing Technological Limitations

The race to develop more sophisticated ethical AI tools faces fundamental constraints. Current technological limitations create bottlenecks in processing power, data quality, and algorithmic complexity. Breakthroughs in quantum computing and neuromorphic chips may eventually overcome these barriers, but interim solutions require creative engineering. Some researchers advocate for "good enough" systems that deliver practical value today while laying foundations for tomorrow's advancements.

Ensuring Ethical and Social Responsibility

The ethical dimensions of AI risk detection present paradoxes. Systems designed to identify bias might themselves contain hidden biases. Regulations intended to prevent harm could inadvertently stifle innovation. The path forward likely involves adaptive frameworks that evolve alongside the technology they govern, with mechanisms for continuous stakeholder input. International cooperation will be essential, as ethical standards vary significantly across cultures.

Fostering Interdisciplinary Collaboration

The most promising developments in ethical AI emerge from unexpected intersections—computer scientists working with anthropologists, engineers collaborating with ethicists. True innovation happens when diverse perspectives challenge assumptions and reveal blind spots in conventional approaches. Creating spaces for these collaborations—both physical and virtual—will be crucial for solving the field's most intractable problems.