Overcoming Bias in AI-Generated Entertainment Content

Data Quality and Representation

Ensuring data quality is paramount for effective model training. Inaccurate, incomplete, or biased data can lead to flawed models, ultimately impacting the accuracy and reliability of predictions. Thorough data cleaning, validation, and preprocessing steps are essential to identify and address inconsistencies, outliers, and missing values. This process involves techniques like handling missing data, transforming variables, and removing duplicates, all contributing to a more robust dataset.
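As a rough illustration of the cleaning steps named above, the sketch below removes exact duplicates and fills missing numeric values with the median. The record layout and field names (`title`, `genre`, `runtime`) are invented for the example, and median imputation is only one of several reasonable choices.

```python
from statistics import median

def clean_records(records, numeric_field):
    """Drop exact duplicate records, then fill missing numeric values with the median."""
    seen = set()
    unique = []
    for rec in records:
        key = tuple(sorted(rec.items()))  # hashable fingerprint of the record
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))  # copy so the input is left untouched
    known = [r[numeric_field] for r in unique if r[numeric_field] is not None]
    fill = median(known)
    for r in unique:
        if r[numeric_field] is None:
            r[numeric_field] = fill
    return unique

# Hypothetical catalogue records; the field names are illustrative only.
raw = [
    {"title": "A", "genre": "drama", "runtime": 100},
    {"title": "A", "genre": "drama", "runtime": 100},    # exact duplicate
    {"title": "B", "genre": "comedy", "runtime": None},  # missing value
    {"title": "C", "genre": "drama", "runtime": 120},
]
cleaned = clean_records(raw, "runtime")
```

Here the duplicate of "A" is dropped and the missing runtime for "B" is filled with the median of the known values.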

Furthermore, the representation of various groups and characteristics within the data is crucial. Models trained on data that lacks diversity risk perpetuating existing biases and failing to generalize effectively to different populations. Careful consideration of demographic, geographic, and other relevant factors is essential to ensure a comprehensive and representative dataset.
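One simple way to check representation is to compare each group's share of the dataset with a reference share. In the sketch below, the `region` field and the 50/50 reference split are assumptions made up for illustration; in practice the reference would come from census or audience data.

```python
from collections import Counter

def representation_gaps(samples, group_key, reference_shares):
    """Return dataset share minus reference share for each group.

    Positive values mean the group is overrepresented, negative values
    mean it is underrepresented relative to the reference.
    """
    counts = Counter(s[group_key] for s in samples)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical dataset: 6 samples from "north", 2 from "south".
samples = [{"region": "north"}] * 6 + [{"region": "south"}] * 2
gaps = representation_gaps(samples, "region", {"north": 0.5, "south": 0.5})
```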

Model Selection and Architecture

Choosing the appropriate machine learning model is critical for optimal performance. Different models are suited to different types of data and tasks. For example, linear regression models are effective for predicting continuous variables, while decision trees can handle both continuous and categorical variables with relative ease. Careful consideration of the specific problem and the characteristics of the data is essential.

The architecture of the model, including the number of layers, neurons, and activation functions, also significantly impacts performance. Experimentation with different architectures and hyperparameters is often necessary to achieve optimal results. This process may involve techniques such as cross-validation and grid search to evaluate different configurations and identify the best-performing model.
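The cross-validation and grid search loop can be sketched without any library. To keep the example short, a one-parameter ridge regression stands in for a neural network, and the regularization strength `lam` stands in for an architecture choice; both are assumptions for illustration, and the same loop applies to any hyperparameter.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form slope for y ~ w * x with an L2 penalty of strength lam."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def k_fold_mse(xs, ys, lam, k=3):
    """Average held-out mean squared error over k interleaved folds."""
    n = len(xs)
    errors = []
    for fold in range(k):
        test_idx = set(range(fold, n, k))
        train = [(xs[i], ys[i]) for i in range(n) if i not in test_idx]
        test = [(xs[i], ys[i]) for i in test_idx]
        w = fit_ridge_1d([x for x, _ in train], [y for _, y in train], lam)
        errors.append(sum((y - w * x) ** 2 for x, y in test) / len(test))
    return sum(errors) / k

def grid_search(xs, ys, grid, k=3):
    """Score every candidate in the grid and return the best one with all scores."""
    scores = {lam: k_fold_mse(xs, ys, lam, k) for lam in grid}
    best = min(scores, key=scores.get)
    return best, scores

# Toy data that follows y = 2x exactly, so no regularization should win.
xs = [1, 2, 3, 4, 5, 6]
ys = [2, 4, 6, 8, 10, 12]
best_lam, scores = grid_search(xs, ys, grid=[0.0, 1.0, 10.0])
```

Each configuration is scored only on data it was not fitted to, which is what makes the comparison between configurations fair.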

Training Strategies and Optimization

Effective training strategies are vital for achieving optimal model performance. Choosing the right learning rate is essential to avoid oscillations and ensure the model converges to a stable solution. Different optimization algorithms, such as stochastic gradient descent, Adam, or RMSprop, can impact the training process. The selection of an appropriate algorithm depends on the specific characteristics of the model and the data.
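The effect of the learning rate is easiest to see on a toy problem. The sketch below runs plain gradient descent on f(w) = w^2, a function chosen purely for illustration: each update multiplies w by (1 - 2 * lr), so a small rate shrinks w steadily while a rate above 1 makes it flip sign and grow.

```python
def gradient_descent(lr, steps=20, w0=1.0):
    """Minimize f(w) = w**2 with a fixed learning rate and return every iterate."""
    w = w0
    history = [w]
    for _ in range(steps):
        grad = 2 * w       # derivative of w**2
        w = w - lr * grad  # standard gradient descent update
        history.append(w)
    return history

stable = gradient_descent(lr=0.1)    # shrinks smoothly toward the minimum at 0
unstable = gradient_descent(lr=1.1)  # overshoots, alternates sign, and diverges
```

Adaptive optimizers such as Adam or RMSprop adjust the effective step size per parameter, but the base learning rate still has to be chosen with this trade-off in mind.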

Monitoring the training process is crucial to identify potential issues and ensure the model is learning effectively. Training and validation loss, along with metrics such as accuracy, should be tracked throughout. A validation loss that rises while training loss keeps falling signals overfitting; losses that stay high on both sets signal underfitting. Adjusting the training parameters in response helps the model generalize well to unseen data.
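A common way to act on tracked metrics is early stopping: halt training once validation loss has stopped improving. The sketch below assumes a list of per-epoch validation losses is already available; the numbers and the `patience` value are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (best_epoch, best_loss), stopping after `patience` epochs without improvement."""
    best = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss has stalled; stop training here
    return best_epoch, best

# Hypothetical validation losses: improvement stalls after epoch 2.
losses = [0.9, 0.7, 0.6, 0.62, 0.65, 0.5]
stop = early_stopping_epoch(losses, patience=2)
```

With a patience of 2, training stops after epoch 4 and the checkpoint from epoch 2 is kept. The later value of 0.5 is never reached, which shows the cost of choosing too small a patience.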

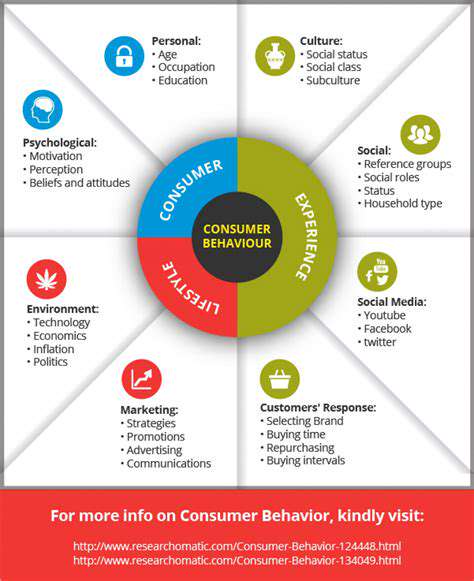

Bias Mitigation and Fairness

Addressing potential biases within the data and the model is essential to ensure fairness and equitable outcomes. Data preprocessing techniques can help mitigate biases that might be present in the dataset. For example, identifying and removing biased data points or re-weighting data points can help reduce the impact of skewed representations.
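Re-weighting can be sketched as follows: each sample gets a weight inversely proportional to the size of its group, so every group contributes the same total weight during training. The group labels are placeholders, and this is one simple scheme among several, not a prescribed method.

```python
from collections import Counter

def balancing_weights(groups):
    """Weight each sample by n / (k * group_size) so all groups carry equal total weight."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

# Hypothetical group labels: group "a" is three times as common as group "b".
groups = ["a", "a", "a", "b"]
weights = balancing_weights(groups)
```

The weights sum to the number of samples, so the overall scale of the loss is unchanged; only the balance between groups shifts.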

Furthermore, evaluating the model for potential biases is crucial. Techniques like fairness-aware metrics can be used to detect and quantify bias in model outputs, helping to identify and address potential issues. This careful consideration of bias throughout the entire process is vital for building fair and ethical AI systems.
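One widely used fairness-aware metric is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below assumes binary predictions (1 for selected or recommended) and invented group labels.

```python
def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups; 0 means parity."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical outputs: group "x" is selected 3 times out of 4, group "y" once out of 4.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["x", "x", "x", "x", "y", "y", "y", "y"]
gap = demographic_parity_difference(preds, groups)
```

A gap of 0 means every group is selected at the same rate. What counts as an acceptable gap depends on the application and is a policy decision, not something the metric settles.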

Techniques for Bias Detection and Mitigation

Understanding the Types of Bias

Bias in data and algorithms takes several forms, each of which undermines the fairness and reliability of the results. Three matter most here: selection bias, confirmation bias, and algorithmic bias. Selection bias occurs when the data used to train a model isn't representative of the overall population, so the model's outputs skew toward the groups that are overrepresented. Confirmation bias arises when the people collecting or analyzing data favor evidence that supports what they already believe, so those beliefs are reinforced rather than tested.

Algorithmic bias stems from the design of the algorithm itself, such as its objective or the features it relies on, and can perpetuate or amplify societal biases already present in the data. Identifying these various forms of bias is the first step towards developing effective countermeasures.

Data Collection Methods and Evaluation

Careful consideration of data collection methods is essential to minimizing bias. Using diverse and representative datasets is paramount for ensuring the fairness and accuracy of the results. Methods like stratified sampling can help achieve this, ensuring that different demographic groups are adequately represented in the dataset. Rigorous evaluation of the data is equally important, checking for inconsistencies, outliers, and missing values that could skew the results.
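Stratified sampling can be sketched in a few lines: split the records by group, then draw the same fraction from each group so that none disappears from the sample. The `group` field, the sampling fraction, and the fixed seed are assumptions chosen for the example.

```python
import random
from collections import defaultdict

def stratified_sample(records, key, fraction, seed=0):
    """Draw the same fraction from every stratum, keeping at least one record per stratum."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    strata = defaultdict(list)
    for rec in records:
        strata[rec[key]].append(rec)
    sample = []
    for members in strata.values():
        n = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, n))
    return sample

# Hypothetical records: 8 from group "A", 2 from group "B".
records = [{"id": i, "group": "A"} for i in range(8)]
records += [{"id": i, "group": "B"} for i in range(8, 10)]
sample = stratified_sample(records, "group", fraction=0.5)
```

A plain random draw of five records from this set could easily contain no one from group "B"; the stratified draw always includes that group.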

Analyzing the data for patterns and anomalies can unveil potential biases in the data collection process, allowing for corrective measures to be taken.

Algorithmic Transparency and Explainability

Understanding how an algorithm arrives at a particular decision is crucial for detecting bias. Implementing algorithms with greater transparency and explainability allows for a deeper understanding of the decision-making process. This includes using interpretable models and providing clear explanations for the algorithm's predictions.

Techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can be employed to make the decision process more transparent, facilitating the identification and mitigation of bias.
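To show the idea behind SHAP, the sketch below computes exact Shapley values for a tiny model by enumerating every subset of features. This is not the SHAP library's API; SHAP relies on approximations because exact enumeration grows exponentially with the number of features. The linear `predict` function and the all-zero baseline are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, instance, baseline):
    """Exact Shapley value of each feature, with absent features set to the baseline."""
    n = len(instance)

    def value(present):
        x = [instance[i] if i in present else baseline[i] for i in range(n)]
        return predict(x)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                phi += weight * (value(present | {i}) - value(present))
        phis.append(phi)
    return phis

# Hypothetical linear scorer; for a linear model with a zero baseline,
# each Shapley value equals the feature's coefficient times its value.
def predict(x):
    return 3 * x[0] + 2 * x[1] - x[2]

phis = shapley_values(predict, instance=[1, 1, 1], baseline=[0, 0, 0])
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline. A large attribution on a feature that encodes or proxies a protected attribute is a signal worth investigating.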

Statistical Methods for Bias Detection

Statistical methods offer powerful tools for detecting and quantifying bias in datasets. Techniques like t-tests and ANOVA compare an outcome across groups (two groups for a t-test, three or more for ANOVA) and indicate whether the observed disparities are statistically significant. Statistical analysis can identify potential biases in the data distribution, allowing for adjustments or the exclusion of potentially biased data points.

Further analysis, such as correlation analysis and regression modeling, can reveal relationships between variables and expose biases hidden in those relationships, for example a seemingly neutral feature that acts as a proxy for a protected attribute.
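As a minimal sketch of a two-group comparison, the code below computes Welch's t statistic and its approximate degrees of freedom using only the standard library. Welch's variant is chosen here because it does not assume equal variances; the scores are invented, and turning the statistic into a p-value would require a t distribution from a statistics package, which is left out.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two samples."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical model scores assigned to content from two groups of creators.
group_a = [4, 5, 6, 5, 5]
group_b = [2, 3, 3, 2, 3]
t_stat, dof = welch_t(group_a, group_b)
```

A large absolute t value relative to the degrees of freedom suggests the difference in means is unlikely to be due to chance alone, though it says nothing about why the groups differ.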

Human Review and Auditing

Incorporating human review and auditing is crucial for ensuring the fairness and accuracy of the results. Human intervention can identify biases that automated systems might miss, allowing for a more holistic assessment. Regular audits of the data and algorithms can help to detect and address emerging biases over time, ensuring continuous improvement.

Thorough reviews of the outputs of the algorithms can highlight any unexpected patterns or inconsistencies that could indicate the presence of bias.

Ethical Considerations in Bias Mitigation

Addressing bias requires a strong ethical framework. Considering the potential impact on different groups is essential when designing and implementing solutions. Ethical guidelines should promote fairness and inclusivity in the development and deployment of algorithms.

Open discussion and collaboration with diverse stakeholders are critical for ensuring that the solutions are not only technically sound but also ethically responsible.

Continuous Monitoring and Evaluation

Bias detection is not a one-time process; it's an ongoing effort. Continuous monitoring and evaluation are essential to identify and address emerging biases. Regular assessments of the data and algorithms can identify new patterns and trends that suggest bias. These assessments can help to ensure that the systems remain fair and equitable over time.
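Ongoing monitoring can be as simple as recomputing a fairness measure on each new batch of outputs and flagging the batches that cross a threshold. In the sketch below, the batch names, the binary predictions, and the 0.2 threshold are all assumptions for illustration.

```python
def parity_gap(predictions, groups):
    """Gap between the highest and lowest positive-prediction rate across groups."""
    rates = []
    for g in set(groups):
        picked = [p for p, gg in zip(predictions, groups) if gg == g]
        rates.append(sum(picked) / len(picked))
    return max(rates) - min(rates)

def flag_drift(batches, threshold=0.2):
    """Return the names of batches whose parity gap exceeds the threshold."""
    return [
        name for name, preds, groups in batches
        if parity_gap(preds, groups) > threshold
    ]

# Hypothetical weekly batches of (name, predictions, group labels).
batches = [
    ("week1", [1, 0, 1, 0], ["x", "x", "y", "y"]),  # both groups selected at 50%
    ("week2", [1, 1, 0, 0], ["x", "x", "y", "y"]),  # only group "x" selected
]
flagged = flag_drift(batches)
```

Flagged batches are a prompt for human review, not an automatic verdict; the threshold itself should be revisited as the data changes.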

This ongoing monitoring ensures that the solution remains effective and responsive to evolving societal needs and data trends. Feedback loops are essential to ensure the system continues to deliver fair and unbiased results.

The Future of Inclusive Entertainment: A Collaborative Approach

Fostering Representation

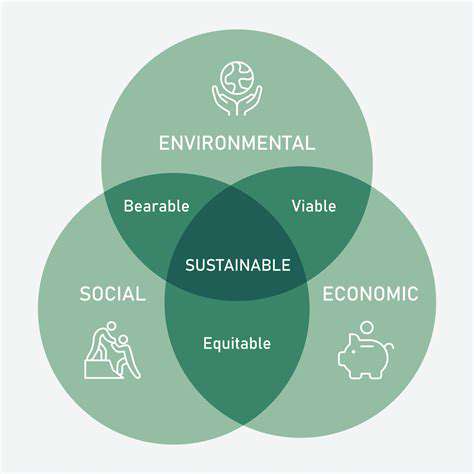

A truly inclusive future for entertainment demands a conscious effort to represent diverse voices, perspectives, and experiences on screen and stage. This goes beyond token casting; it requires a deep understanding of the nuances of different cultures, identities, and lived realities. We need to move beyond stereotypes and clichés, creating characters and narratives that are authentic, complex, and relatable to a wide range of audiences. This involves actively seeking out and supporting creators from underrepresented backgrounds, ensuring their stories are heard and given the platform they deserve.

This isn't just about diversity as a buzzword. It's about recognizing the inherent value in a multitude of stories, and giving space for those stories to thrive. Only when we embrace this kind of representation can we truly foster understanding and empathy amongst audiences, and ultimately, create a more just and equitable society.

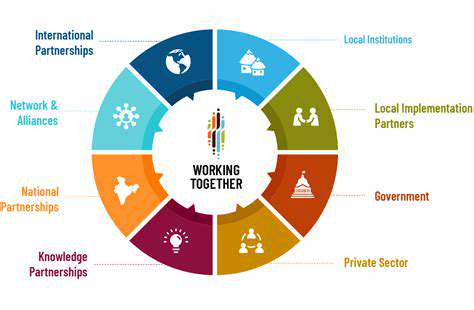

Cultivating Collaboration

The future of inclusive entertainment isn't about one entity doing it alone. It's a collaborative effort involving creators, producers, actors, writers, and audiences. Open dialogue, constructive feedback, and a genuine willingness to learn from each other are essential components of this collaborative approach. Meaningful conversations need to occur between diverse groups, fostering an environment where everyone feels empowered to contribute their unique perspectives.

Prioritizing Accessibility

Accessibility isn't just about physical accessibility; it encompasses a broader range of needs and preferences. Inclusive entertainment must consider the diverse needs of audiences, including those with disabilities, different language backgrounds, and varying levels of cultural understanding. This means ensuring subtitles and audio descriptions are readily available, providing content in multiple languages, and actively seeking feedback from diverse audiences to understand their needs and preferences.

Addressing Bias in Storytelling

Implicit biases often seep into storytelling, shaping characters, narratives, and even the very way stories are told. To create truly inclusive entertainment, it's crucial to actively identify and challenge these biases. This requires a critical examination of scripts, casting choices, and the overall narrative structure to ensure they are not perpetuating harmful stereotypes or marginalizing certain groups. A commitment to continuous self-reflection and education is paramount in this process.

Promoting Critical Consumption

Ultimately, the responsibility for fostering inclusive entertainment rests not only with the creators but also with the consumers. Developing critical viewing habits and actively engaging with diverse perspectives can contribute significantly to fostering inclusivity in the entertainment industry. Challenging stereotypes, supporting diverse voices, and actively seeking out content that reflects a variety of experiences are key components of this approach. By demanding more from the entertainment we consume, we can encourage a more inclusive and equitable future for all.